Context

I was asked by a design educator to create an exercise for graduate students to learn about and discuss how human biases make their way into learning algorithms. The course was being conducted online due to COVID-19, and all phases of the project were carried out remotely. The goal was to work with the instructor to create teaching material that would give students a demystified view of machine learning and guide them in using it meaningfully in their work.

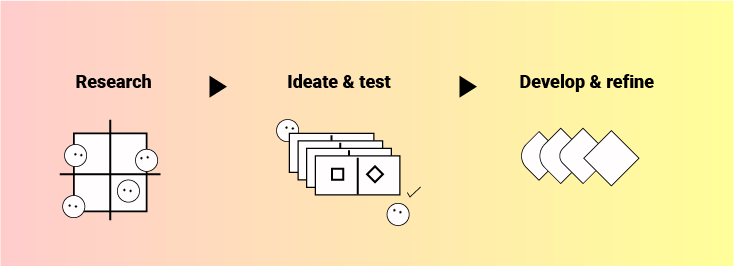

Project Mapping and Design Process

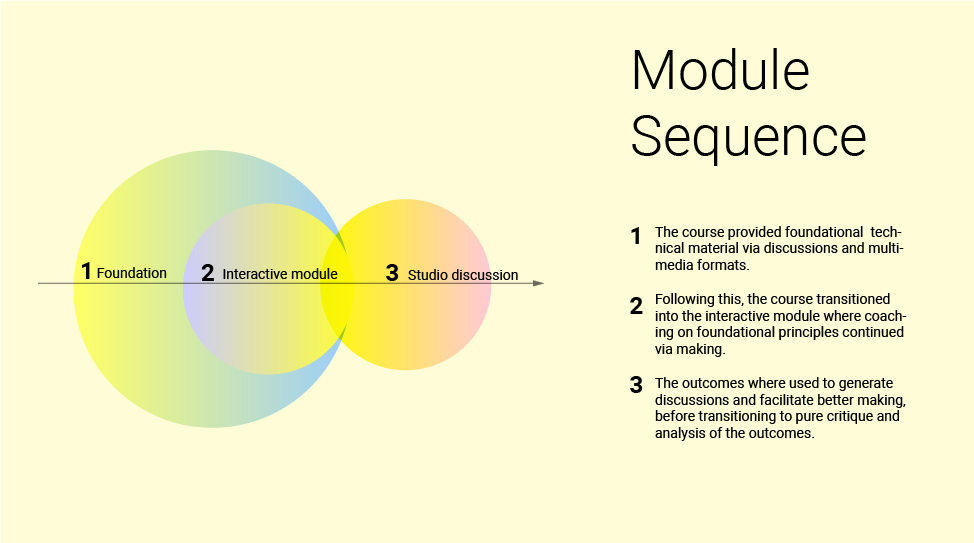

The project had a quick turnaround of four weeks, and the instructor already had study material, including lecture notes and references. The course was designed as a studio course: before the COVID-19 restrictions, most of it had been dedicated to open, in-person discussion and experimentation. The online format would keep the same structure, with discussions held online and students spending part of their time experimenting with systems and ideas.

The instructor also had student feedback from earlier versions of the course that offered useful insights into user behavior, motivations and expectations. Since a fair amount of research and content was already in place, we spent time solidifying our direction of exploration through user studies and testing various ideas to find the one that worked best. Once we had, the scope was clear, and I worked with the developer and the instructor to create assets for development and distribution to students.

Research

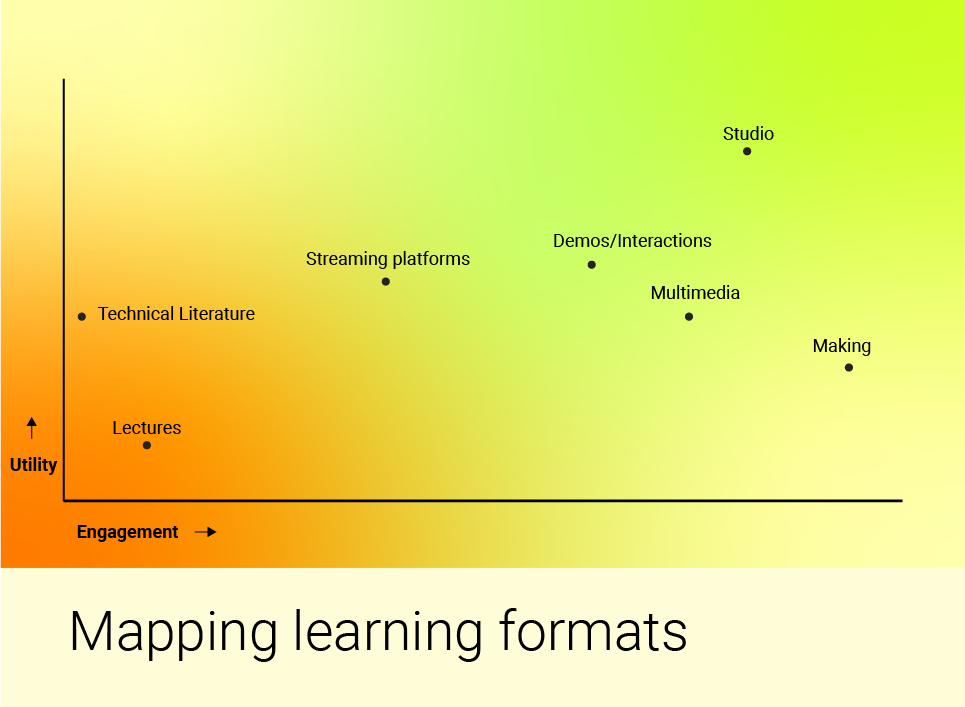

The course had been run once the previous year, and the students' feedback served as a valuable source of user research. It was especially helpful for understanding the students' starting level of knowledge and what they expected to get out of the course. Contextual interviews and market research also helped us understand the gaps in existing products and in the learning methods used by students and instructors. Some of the key insights were:

- ~60% of the students felt that they knew more about machine learning but that it was still inaccessible to them, despite their basic technical skills.

- ~80% said they wanted to be able to “make” something in the course, and that group discussions and interactive demos had been memorable and impactful.

- Students did not have a sound technical knowledge of the basics of machine learning but had exposure to the larger discussions surrounding the tools.

- Some were misinformed on the accuracy, abilities and scope of learning algorithms.

- Post-quarantine, the shift in focus to learning online had increased user familiarity with learning via a screen interface.

- Students had used platforms like Coursera, YouTube, Khan Academy and Udemy.

- Transcription, study material and instructors were key factors in the efficacy of course content.

The research showed that while the students were enthusiastic about ‘making’, they lacked clarity about conceptual thinking and about the fundamentals of the systems they were using.

Encouragingly, we also gained some insight into what made ‘making’ exciting for the students: seeing results quickly or having a tangible outcome was a motivator. The approach, though satisfactory, fell short in some ways. Quickly making something and being satisfied with it did not get students to grasp concepts and apply them contextually in their work. They often stopped after a few iterations and were unable to justify their design choices. We needed to leverage what worked well in this method and address the gaps.

This was achieved by:

- Creating an interactive module that allows for experimentation and can be used to test for bias when working with algorithms as a tool.

- Packaging the interactive module in a way that builds context and provides fundamentals.

Ideation and prototyping

Providing fundamentals

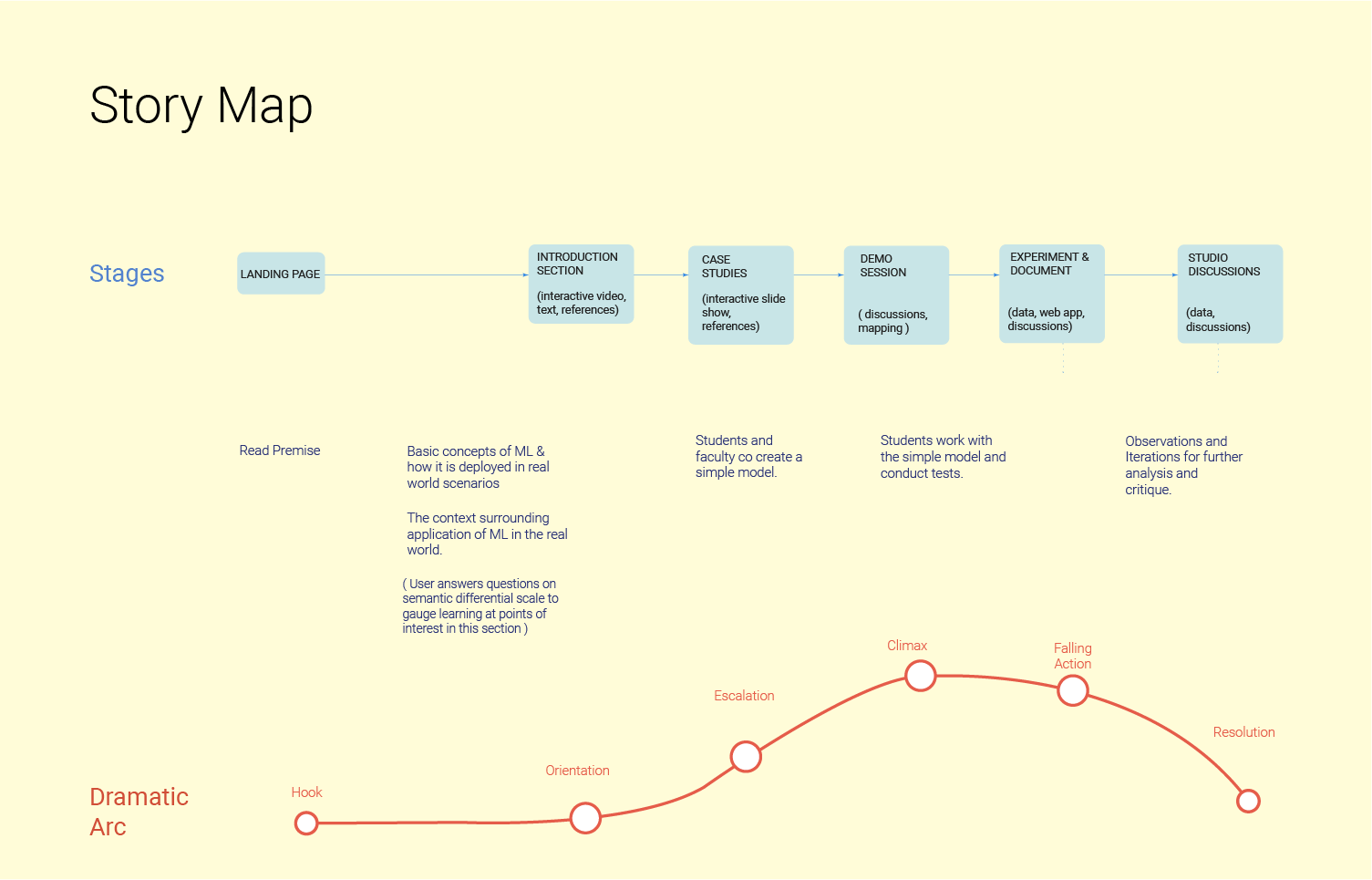

To let the students work effectively with machine learning tools, we first had to teach them the fundamentals. To do this, I assessed the study material and applied a narrative structure to it. The existing material was then categorized according to the part of the narrative it fit into. Once the gaps in content were identified, the instructor was able to assign relevant material to address most of them.

With this, we had a strong story in place within which to situate the interactive test.

I also worked with the instructor to create a set of illustrations explaining machine learning fundamentals such as supervised and unsupervised learning, hypothesis functions, perceptrons and simple regression, among other technical concepts. Using a cohesive visual language throughout helped maintain a sense of continuity and helped students follow the story and complete the exercise successfully.

Creating the test for bias

The precompiled course material had explored some of the ways in which faulty data and proxies for factors like race and gender could accentuate bias. The students needed to be able to test for, and define, behavior that they identified as biased and unfair. With this in mind, we created a simple experiment in which the user trains an algorithm and tests its results for bias.

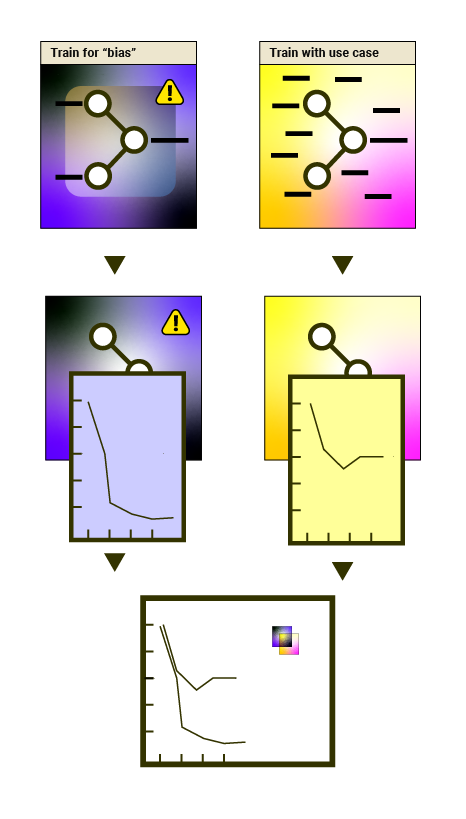

The steps of the test were:

- Define and prepare explicitly biased data, and use it to train the algorithm to “behave badly”. Measure performance with the test set.

- Evaluate and prepare data from the use case, and use it for training. Measure performance with the test set.

- Compare the results.

This simple exercise would allow users to run comparative studies and see first-hand whether and how the specific data used for training and prediction could embed bias. The results were also essentially unpredictable beforehand, since what counted as “good behavior” and “bad behavior”, like what counted as good and bad data, was left to the user's discretion. This was key: it produced a range of observations to discuss in the open-ended sessions and raised questions about if, when and how biases could be implicit in results. It also meant that students could bring their own beliefs and personal motivations into the learning space and extend them to what they were studying.
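The three steps can be sketched in Python as a small, self-contained experiment. This is a minimal illustration under stated assumptions, not the course's actual code: the toy data (a score from 1 to 10 and two groups, A and B), the two labelling rules and the perceptron learner (picked because perceptrons were among the fundamentals covered) are all hypothetical. One version is trained on deliberately biased labels and the other on merit-only labels; both are measured against the same held-out test set, and the comparison reports accuracy alongside each group's positive-prediction rate.

```python
# Minimal sketch of the "train twice, test once, compare" exercise.
# The data, labelling rules and perceptron learner are hypothetical stand-ins.


def make_rows(scores, label_fn):
    """Build (features, group, label) rows for two hypothetical groups, A and B."""
    rows = []
    for group in ("A", "B"):
        for s in scores:
            x = [s, 1 if group == "B" else 0]  # second feature acts as a group proxy
            rows.append((x, group, label_fn(s, group)))
    return rows


def predict(model, x):
    w, b = model
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(rows, lr=1, max_epochs=5000):
    """Classic perceptron with a bias term; stops after an error-free epoch."""
    w, b = [0] * len(rows[0][0]), 0
    for _ in range(max_epochs):
        mistakes = 0
        for x, _group, y in rows:
            err = y - predict((w, b), x)
            if err:
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
                mistakes += 1
        if mistakes == 0:
            break
    return w, b


def evaluate(model, test_rows):
    """Return accuracy and each group's positive-prediction rate."""
    correct, counts = 0, {}  # counts: group -> [positives, total]
    for x, group, y in test_rows:
        p = predict(model, x)
        correct += int(p == y)
        c = counts.setdefault(group, [0, 0])
        c[0] += p
        c[1] += 1
    rates = {g: pos / total for g, (pos, total) in counts.items()}
    return correct / len(test_rows), rates


def merit_label(score, group):
    return 1 if score > 5 else 0  # "good behavior": only the score matters


def biased_label(score, group):
    return 1 if score > 5 and group == "A" else 0  # "bad behavior": group B never passes


train_scores = [1, 2, 3, 8, 9, 10]
test_rows = make_rows([0.5, 2.5, 8.5, 9.5], merit_label)  # shared held-out test set

results = {}
for name, label_fn in [("biased", biased_label), ("use_case", merit_label)]:
    model = train_perceptron(make_rows(train_scores, label_fn))
    acc, rates = evaluate(model, test_rows)
    gap = max(rates.values()) - min(rates.values())
    results[name] = (acc, rates, gap)
    print(f"{name}: accuracy={acc:.2f}, positive rates={rates}, gap={gap:.2f}")
```

Because the group indicator is one of the input features, it plays the role of the proxy discussed in the course material: the biased version learns to rely on it, which shows up as a gap in positive-prediction rates between groups and lower accuracy on the merit-labelled test set, while the use-case version shows no such gap.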

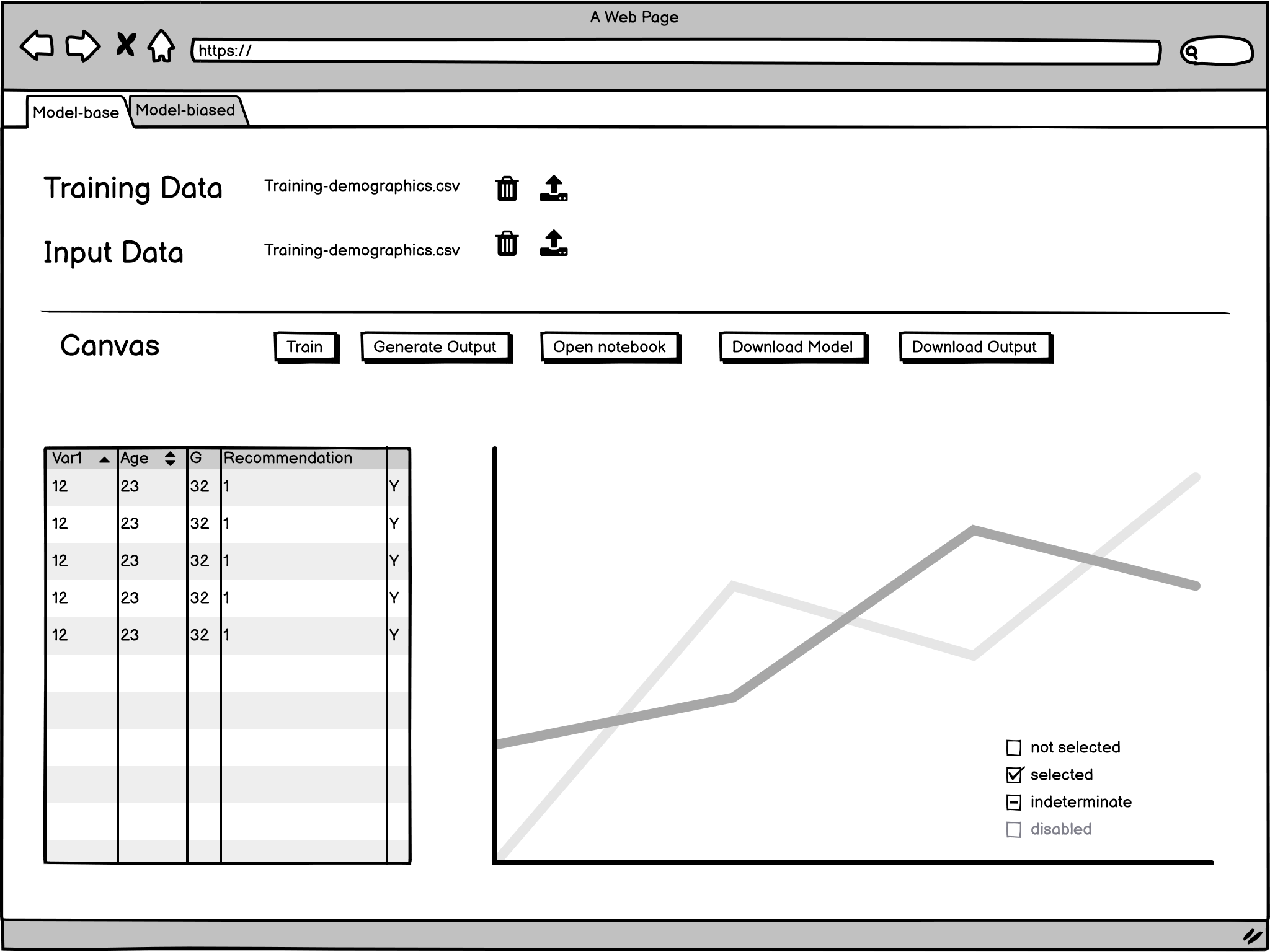

Together with a developer, we created a workflow in which the student would prepare and upload datasets to train and test two versions of the model. A simple interface let students upload, run, check and download the results for further discussion. The interface was designed as a template that could be replicated to create ~5 differently trained versions of the same model.

Each version of the model, trained on different data, was rendered as a separate tab. Interested students could download the source code as a Jupyter notebook. The output CSV and the resulting graph were displayed for quick reference.
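The per-version output could be produced along the following lines. This is a hypothetical sketch, not the tool's actual implementation: the column layout, the `results_csv` and `export_all` helpers, the five-version cap (taken from the ~5 versions the template targeted) and the stand-in threshold predictors, which take the place of trained models, are all assumptions. Each version yields its own CSV text, which maps onto one downloadable file per tab.

```python
import csv
import io

# Hypothetical column layout for one version's downloadable results file.
FIELDS = ["version", "score", "group", "label", "prediction"]
MAX_VERSIONS = 5  # assumed cap, mirroring the ~5 versions the template targeted


def results_csv(version, predict_fn, test_rows):
    """Serialise one model version's test-set predictions as CSV text."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    for score, group, label in test_rows:
        writer.writerow({
            "version": version,
            "score": score,
            "group": group,
            "label": label,
            "prediction": predict_fn(score, group),
        })
    return buf.getvalue()


def export_all(versions, test_rows):
    """Return one CSV per version, mirroring one tab per differently trained model."""
    if len(versions) > MAX_VERSIONS:
        raise ValueError(f"template supports at most {MAX_VERSIONS} versions")
    return {name: results_csv(name, fn, test_rows) for name, fn in versions.items()}


# Stand-in predictors; in the exercise these would be the trained models.
versions = {
    "biased": lambda s, g: int(s > 5 and g == "A"),
    "use_case": lambda s, g: int(s > 5),
}
test_rows = [(2.5, "A", 0), (8.5, "A", 1), (2.5, "B", 0), (8.5, "B", 1)]
tabs = export_all(versions, test_rows)
print(tabs["biased"])
```

Keeping each version's output as a separate CSV keeps the tabs independent, so students can download and compare any pair of versions side by side in the open discussion.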

Takeaway

It was quite challenging to create a test that was relevant to the users' practice and still generated interest and curiosity. We learned the nuances of leveraging precedents, existing interests and leanings to build experiences that communicate new ideas and impress them upon students.